
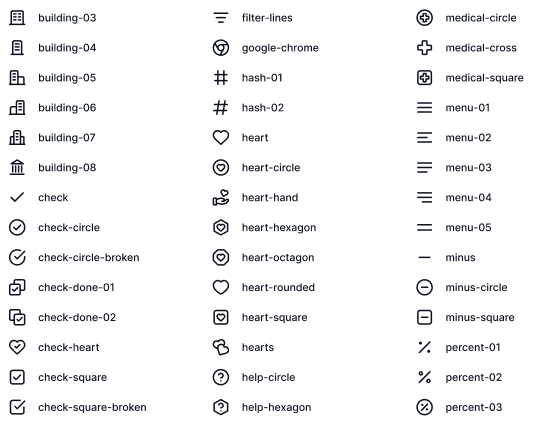

At Metronome, icons are an integral part of our user interface, appearing on almost every page in the product. You'll find them in buttons, headers, nav menus, tables, and more. We chose our old icon library when we were a much smaller company, mainly because it let us get started quickly. We used Ionicons, a set of 1,356 open-source, hand-crafted SVG icons.

But in October last year, we launched Metronome 2.0. That launch included a UI update, for which we created a new design system—complete with a new set of 1,173 custom icons that more closely matched our needs in the Metronome 2.0 world.

While this evolution in our design system was exciting, it presented us with a significant challenge: how do we transition from our old icon set to the new one, without disrupting our product or burdening our design and product engineering teams with a massive manual task?

The challenge

With over 1,300 icons in our old set and nearly 1,200 in the new one, manually mapping each icon would be a time-consuming and error-prone process. These icons are deeply integrated into our UI, and ensuring a smooth transition was crucial for maintaining the integrity and usability of our product.

Our goal was to find an automated solution that could intelligently match our old icons to their most appropriate counterparts in the new set, allowing us to programmatically upgrade icons across the product.

The solution

Attempt 1: String-similarity matching

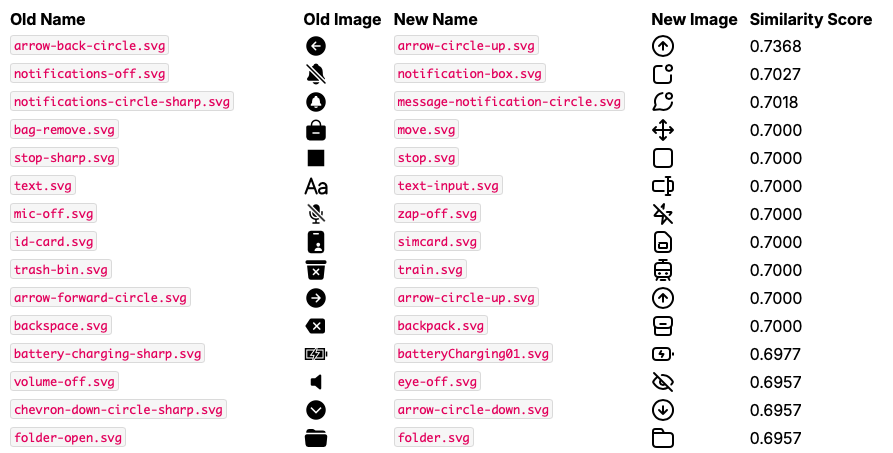

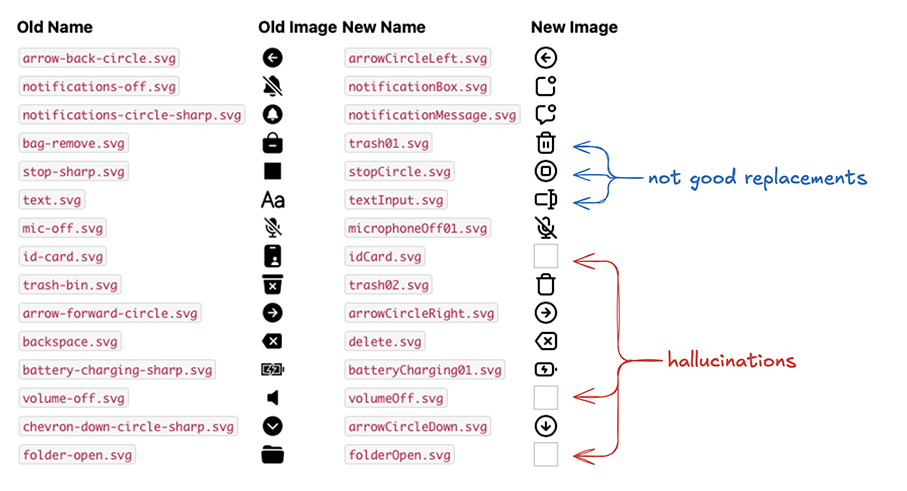

At first, we hoped the icons were named similarly enough that simple string-similarity matching would work. It quickly proved not to, even in simple cases: arrow-back-circle did not map to arrow-circle-left, and trash-bin mapped to train.
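The post doesn't specify which string metric this first attempt used. As an illustrative assumption, the sketch below scores names by normalized Levenshtein edit distance and picks the closest spelling; the helper names `levenshtein`, `stringSimilarity`, and `bestStringMatch` are hypothetical. The weakness is built in: the score only counts character edits, so nothing tells it that "back" and "left" describe the same direction.

```javascript
// Levenshtein edit distance via dynamic programming (two-row version).
function levenshtein(a, b) {
  let prev = Array.from({ length: b.length + 1 }, (_, j) => j);
  for (let i = 1; i <= a.length; i++) {
    const curr = [i];
    for (let j = 1; j <= b.length; j++) {
      const cost = a[i - 1] === b[j - 1] ? 0 : 1;
      // deletion, insertion, substitution
      curr[j] = Math.min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost);
    }
    prev = curr;
  }
  return prev[b.length];
}

// Similarity in [0, 1]; 1 means the strings are identical.
function stringSimilarity(a, b) {
  const maxLen = Math.max(a.length, b.length);
  return maxLen === 0 ? 1 : 1 - levenshtein(a, b) / maxLen;
}

// Hypothetical helper: pick the new icon name whose spelling is closest to the old name.
function bestStringMatch(oldName, newNames) {
  let best = null;
  let bestScore = -Infinity;
  for (const candidate of newNames) {
    const score = stringSimilarity(oldName, candidate);
    if (score > bestScore) {
      bestScore = score;
      best = candidate;
    }
  }
  return { name: best, score: bestScore };
}
```

Calling `bestStringMatch("arrow-back-circle", newIconNames)` returns whichever new name is closest in spelling, which is not necessarily the one closest in meaning.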

Attempt 2: Large language models

Next, we tried using an LLM to capture semantic meaning (for example, that a back arrow should map to a left arrow). We tried several approaches, but found that a single-shot approach, sending both full lists in one request to a model with a large context window (like Anthropic’s Claude), worked fairly well, even with a simple prompt.

```text
Given the following lists of new and old icon names, return a JSON map, mapping each old icon name to one of the new icon names.

Old icons:
==================
arrow-back-circle
notifications-off
notifications-circle-sharp
bag-remove
stop-sharp
text
...

New icons:
==================
grid-dots-blank.svg
repeat02.svg
image-indent-right.svg
cloud-blank01.svg
bank-note02.svg
dots-grid.svg
...
```

The results here were better, but still had key mismatches that made us skeptical of rolling out broadly, especially in places where the names weren’t specific, such as various types of arrows. Additionally, we struggled with hallucinations, even when tweaking the prompt to do one icon at a time.
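One way to surface hallucinated names, rather than trusting the reply, is to check every returned name against the real new-icon list. This is a minimal sketch, assuming the model replies with bare JSON (no surrounding prose or code fences); `buildPrompt` and `validateMapping` are hypothetical helpers, and no model API is called here.

```javascript
// Hypothetical helper: build a single-shot prompt in the same shape as the one above.
function buildPrompt(oldIcons, newIcons) {
  return [
    "Given the following lists of new and old icon names, return a JSON map, mapping each old icon name to one of the new icon names.",
    "",
    "Old icons:",
    "==================",
    ...oldIcons,
    "",
    "New icons:",
    "==================",
    ...newIcons,
  ].join("\n");
}

// Hypothetical helper: parse the reply and separate valid entries from
// missing or invented ones. Assumes replyText is bare JSON.
function validateMapping(replyText, oldIcons, newIcons) {
  const mapping = JSON.parse(replyText);
  const known = new Set(newIcons);
  const valid = {};
  const problems = [];
  for (const oldName of oldIcons) {
    const proposed = mapping[oldName];
    if (proposed === undefined) {
      problems.push({ oldName, reason: "missing" });
    } else if (!known.has(proposed)) {
      problems.push({ oldName, proposed, reason: "not a new icon" });
    } else {
      valid[oldName] = proposed;
    }
  }
  return { valid, problems };
}
```

This catches invented names, but not plausible-looking wrong matches such as one arrow swapped for another, which were the other problem with this approach.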

The winning solution: CLIP embeddings

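To address these issues, we realized we needed to incorporate visual information into our matching process. This led us to CLIP embeddings. CLIP (Contrastive Language-Image Pre-training), originally published by OpenAI, is a neural network that efficiently learns visual concepts from natural-language supervision. It maps images and text into a shared vector space, so an image and a caption describing it end up close together, which means an icon's picture and its name can be compared directly.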
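For context, that shared space comes from how CLIP is trained. Given a batch of $N$ image-text pairs $(x_i, t_i)$, an image encoder $f$, a text encoder $g$, and a learned temperature $\tau$, CLIP scores every image against every caption by cosine similarity $s_{ij}$ and minimizes a symmetric cross-entropy that rewards the matching pair $(i, i)$ in both directions:

$$
s_{ij} = \frac{f(x_i)\cdot g(t_j)}{\lVert f(x_i)\rVert\,\lVert g(t_j)\rVert},\qquad
\mathcal{L} = -\frac{1}{2N}\sum_{i=1}^{N}\left[\log\frac{e^{s_{ii}/\tau}}{\sum_{j=1}^{N} e^{s_{ij}/\tau}} + \log\frac{e^{s_{ii}/\tau}}{\sum_{j=1}^{N} e^{s_{ji}/\tau}}\right]
$$

The first term asks each image to pick out its own caption among all captions in the batch; the second asks each caption to pick out its own image. Minimizing both pulls matching pairs together in one space, which is why image and name embeddings from the same model can be combined and compared with cosine similarity.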

Here's how we used CLIP to solve our icon migration challenge:

- Generating embeddings. We used the CLIP model to generate embeddings for both our old and new icon sets. These embeddings were based on a combination of the icon names and their visual content.

- Finding the best matches. With embeddings for all icons, we could then find the closest match in our new icon set for each old icon by comparing their embedding vectors using cosine similarity.

- Creating a mapping. The result was a semantic mapping between our old and new icons, allowing us to automate much of the migration process.
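The three steps above can be sketched in plain JavaScript once embeddings are computed. This is a hypothetical variant, not the code shown later in this post: instead of keeping only the single best match, `buildMapping` keeps the top `k` candidates and flags low-scoring matches for a designer to review. The `reviewThreshold` of 0.8 is an arbitrary placeholder; CLIP similarity scores are model-dependent, so a real cutoff would need tuning against hand-checked examples.

```javascript
// Cosine similarity; for unit-length vectors this equals the dot product.
function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Hypothetical helper: for each old icon, rank every new icon by similarity,
// keep the top k, and flag the entry when even the best score is below
// reviewThreshold (an assumed placeholder value).
function buildMapping(oldEmbeddings, newEmbeddings, { k = 3, reviewThreshold = 0.8 } = {}) {
  const newEntries = Object.entries(newEmbeddings);
  const mapping = {};
  for (const [oldName, oldVec] of Object.entries(oldEmbeddings)) {
    const ranked = newEntries
      .map(([newName, newVec]) => ({ newName, similarity: cosineSimilarity(oldVec, newVec) }))
      .sort((x, y) => y.similarity - x.similarity)
      .slice(0, k);
    mapping[oldName] = {
      best: ranked[0]?.newName ?? null,
      candidates: ranked,
      needsReview: ranked.length === 0 || ranked[0].similarity < reviewThreshold,
    };
  }
  return mapping;
}
```

Serializing the result with `JSON.stringify(mapping, null, 2)` gives designers a reviewable file, and the `needsReview` entries show where their time is best spent.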

The technical details

Luckily, there are plenty of open-source CLIP models available. We used the dfn5b-clip-vit-h-14-384 CLIP model for this project, since it scores well on the OpenCLIP leaderboard and is available to run on Replicate, which made getting started trivial.

Here's a simplified version of our embedding-generation code. It generates one embedding for the icon image and one for the icon name, then combines them, weighting the image content 2:1 over the name.

```javascript
import Replicate from "replicate";

// The Replicate client authenticates with an API token from the environment
const replicate = new Replicate({ auth: process.env.REPLICATE_API_TOKEN });

const oldIcons = ["terminal-sharp", "help-outline", "duplicate-outline", /* ... */];
const newIcons = ["help-square", "globe01", "phone01", "folder-closed", /* ... */];

const oldIconEmbeddings = {};
const newIconEmbeddings = {};

// Normalize a vector to unit length
const normalizeVector = (v) => {
  const len = Math.sqrt(v.reduce((sum, x) => sum + x * x, 0));
  return v.map((x) => x / len);
};

// Get the embedding for an icon, combining visual and textual information
async function getIconEmbedding(iconName) {
  // Get embeddings for both the icon image and the icon name, in parallel
  const [imageEmbeddings, nameEmbeddings] = await Promise.all([
    // Simplified: when actually running, we batched into batches of 25 icons
    // (each a 128px-wide JPG), as that gave us the best inference times per icon.
    replicate.run("aliakbarghayoori/dfn5b-clip-vit-h-14-384", {
      input: {
        texts: [],
        image_urls: [`s3://../${iconName}.svg`],
      },
    }),
    replicate.run("aliakbarghayoori/dfn5b-clip-vit-h-14-384", {
      input: {
        texts: [iconName],
        image_urls: [],
      },
    }),
  ]);

  // Combine and normalize the embeddings, weighting image to name 2:1
  return normalizeVector(
    imageEmbeddings[0].embedding.map((x, i) => x + 0.5 * nameEmbeddings[0].embedding[i])
  );
}

// Top-level await: run this file as an ES module
for (const oldIcon of oldIcons) {
  oldIconEmbeddings[oldIcon] = await getIconEmbedding(oldIcon);
}

for (const newIcon of newIcons) {
  newIconEmbeddings[newIcon] = await getIconEmbedding(newIcon);
}
```

At this point, we have the embeddings for the old and new icons, so we can find the best match for each of our legacy icons.

```javascript
const vectorDot = (a, b) => a.map((x, i) => x * b[i]).reduce((sum, x) => sum + x, 0);
const vectorLength = (v) => Math.sqrt(v.reduce((sum, x) => sum + x * x, 0));
const vectorCosineSimilarity = (a, b) => vectorDot(a, b) / (vectorLength(a) * vectorLength(b));

// Find the best matching new icon for each old icon
for (const oldIconName of Object.keys(oldIconEmbeddings)) {
  let closestNewIconName = null;
  let bestSimilarity = -Infinity;

  // Compare the old icon embedding with each new icon embedding
  for (const newIconName of Object.keys(newIconEmbeddings)) {
    const similarity = vectorCosineSimilarity(oldIconEmbeddings[oldIconName], newIconEmbeddings[newIconName]);

    // Update the closest match if this similarity is higher
    if (similarity > bestSimilarity) {
      bestSimilarity = similarity;
      closestNewIconName = newIconName;
    }
  }

  console.log(`${oldIconName} best match ${closestNewIconName} with a similarity of ${bestSimilarity.toFixed(4)}`);
}
```

The results

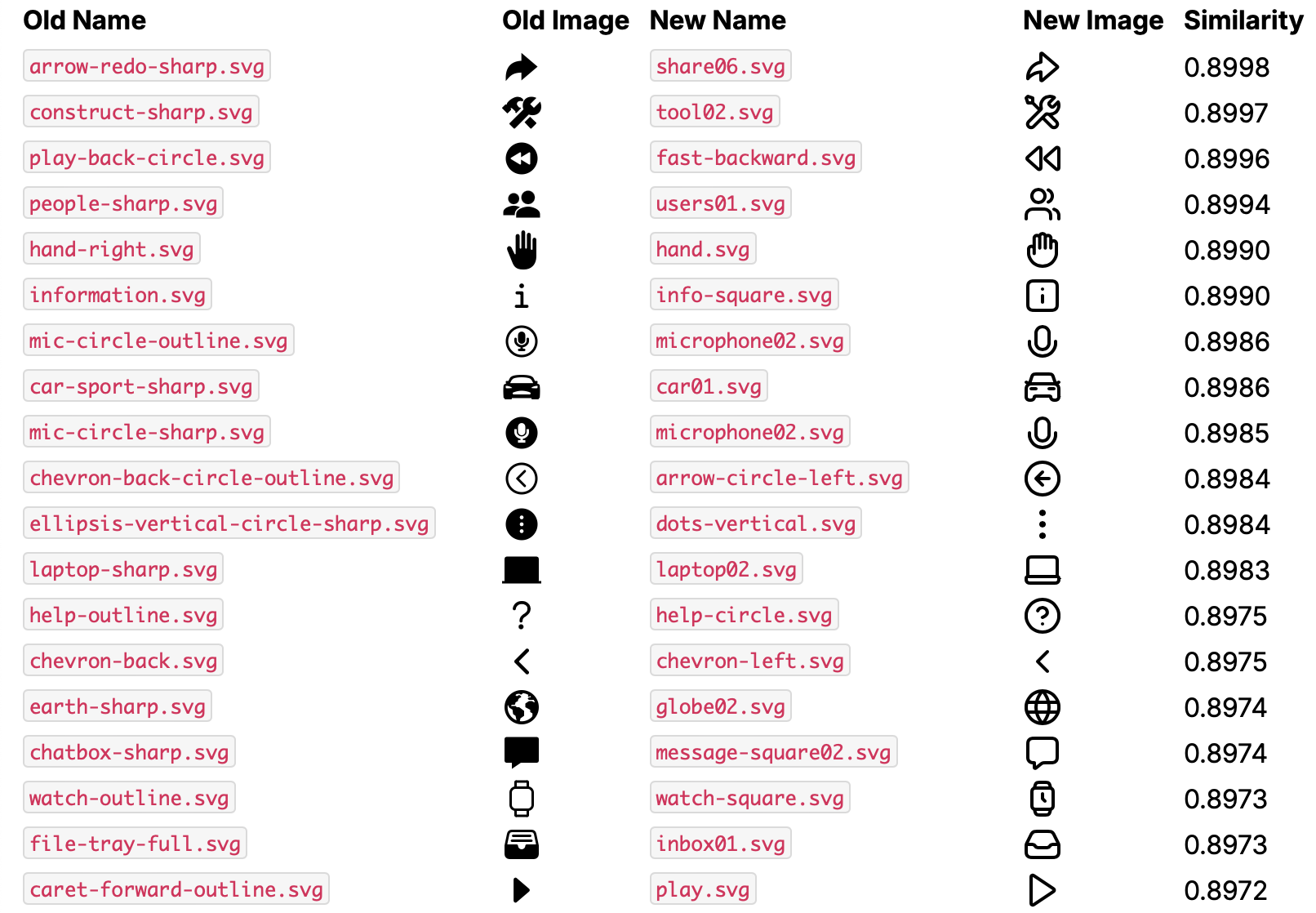

The CLIP-based approach yielded impressive results. It correctly matched icons even when their names were completely different, because the embeddings capture visual similarity between icons, not just their names.

For example, the old icon arrow-redo-sharp was correctly mapped to share06 in our new icon set. While the names are different, the visual representation is nearly identical—something that word-based matching would have missed entirely.

All in all, this project, done as a quick experiment in a few hours, unblocked our product engineering teams so they could continue rolling out our new design system. This was made possible by three things:

- Availability of high-quality open-source CLIP models like dfn5b-clip-vit-h-14-384

- The ability to quickly and easily get started with these models on cloud platforms like Replicate

- Fast, iterative collaboration between our design and engineering teams

By leveraging these factors, we efficiently solved a complex design system migration challenge. This project not only addressed an immediate need but also gave us a fun opportunity to experiment with AI in our design and development cycle using products from two of our customers, Replicate and Anthropic. As we continue to grow, we're excited to explore more applications of AI and our customers’ products in our workflow, increasing our ability to evolve our product rapidly and effectively.
